“The development of full artificial intelligence could spell the end of the human race. Once humans develop artificial intelligence, it will take off on its own and redesign itself at an ever-increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete and would be superseded” (Stephen Hawking)

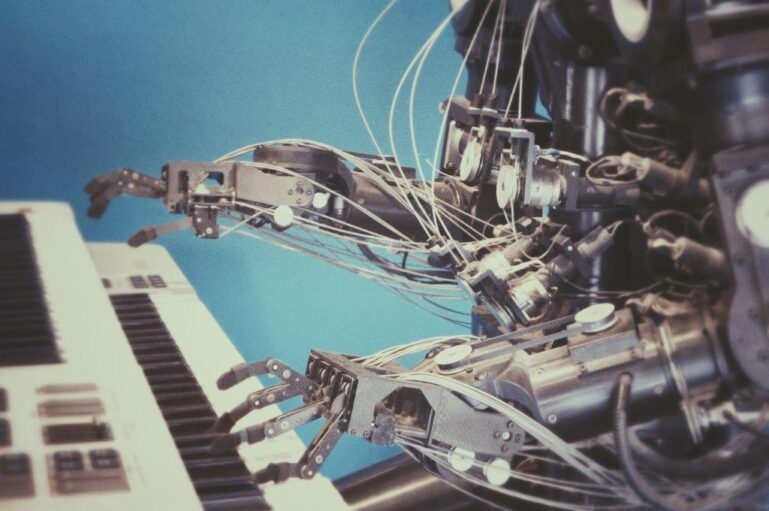

Artificial Intelligence (AI) leverages computers and machines to mimic the problem-solving and decision-making capabilities of the human mind. John McCarthy (Stanford University, 2004) offered the following definition of AI: “It is the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to methods that are biologically observable”. In its simplest form, artificial intelligence is a field that combines computer science and robust datasets to enable problem-solving. It also encompasses the sub-fields of machine learning and deep learning, which are frequently mentioned in conjunction with artificial intelligence. These disciplines comprise AI algorithms that seek to create expert systems which make predictions or classifications based on input data. While classical machine learning is more dependent on human intervention to learn (for example, people preparing and labelling the input data), deep learning is more scalable: its algorithms automate much of the feature-extraction part of the process and so enable the use of large data sets. IBM suggests that a deep-learning algorithm can “ingest unstructured data in its raw form (e.g. text, images) and it can automatically determine the hierarchy of features which distinguish different categories of data from one another”.

Positively, this has huge application in a variety of fields:

- Speech recognition – the ability to translate voice to text or vice versa, using Natural Language Processing. Many devices now use this feature (e.g. Siri and Cortana).

- Digital vision – the ability to derive meaningful information from digital images, videos and other visual inputs and, based on those inputs, take action.

- Customer service – online chatbots answer frequently asked questions, provide personalised service, suggest cross-selling options and even sizes of garments, etc.

- Marketing – based on the above “customer service” category, fashion trends and a variety of other inputs and data sets, business is able to predict what consumers are going to buy in the future and market accordingly.

- Health care – a vast variety of applications in all aspects, from diagnosing and prescribing to monitoring and predicting (the latter having been used extensively during the current coronavirus pandemic).

- Avionics, weather, climate, stock exchange, gaming, politics: the list is endless.

Negatively, the question needs to be asked: “Is artificial intelligence thinking too deeply?” Have we created a very sophisticated and intelligent “Pacman” that will eventually “eat” or “gobble up” humanity as we currently know it? There are many worrying features of AI:

- Deep learning (self-learning and developing) algorithms are set the goal not only of predicting a certain outcome effectively, but also of improving themselves over time. Simply put, if I want directions from Paris to London by road and ferry, the algorithm is designed to weigh the possible routes (and potential barriers such as traffic flow, weather conditions and available fuel stops) to predict the best one – clearly a route that takes one via Rome is neither efficient nor effective. As algorithms grow in understanding and take more control, they could theoretically shut down a vehicle if the driver doesn’t comply with the suggested route (for example, by turning off the car when the driver wants to take a more scenic alternative).

- Reinforcement learning (in which goals are implicitly induced by rewarding some types of behaviour and punishing others) can be used malevolently and cause great harm. For example, how is someone able to call me from another continent, attempting to sell me a dubious scheme, while knowing my name, income bracket and personal preferences? The availability of huge databases (often stolen) and other social media information enables these algorithms to “learn” very quickly and to pass this learning into the wrong hands.

- A lack of common-sense reasoning in AI can induce mistakes that normal human beings seldom make, with disastrous effects. For example, self-driving vehicles cannot reason about the intentions of pedestrians in the exact way that humans do and must instead use non-human modes of reasoning to avoid accidents. Humans instinctively know to slow down in an area with many tourists, realising that the tourists are looking around at the sights without being fully aware of the traffic.

- Machine ethics, which aims to enable machines to function in ethically appropriate ways through their own ethical decision-making processes, is a hot but as yet unresolved topic, especially as machines become more autonomous. In contrast to computer hacking, software property issues, privacy issues and other topics normally ascribed to computer ethics, machine ethics is concerned with the behaviour of machines towards human users and other machines. Political scientist Charles T. Rubin believes that AI can be neither designed nor guaranteed to be benevolent. He argues that “any sufficiently advanced benevolence may be indistinguishable from malevolence.” Humans should not assume that machines or robots would treat us favourably, because there is no a priori reason to believe that they would be sympathetic to our system of morality, which has evolved along with our particular biology (which AI would not share). Hyper-intelligent software may not necessarily decide to support the continued existence of humanity and would be extremely difficult to stop.
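To make the route-planning example in the first point above a little more concrete, here is a minimal sketch of how software can pick the quickest route without checking every possible one. It uses Dijkstra's algorithm, a classical shortest-path search rather than a deep-learning model, and the `ROADS` network, its travel times and the `best_route` function are all invented for illustration.

```python
import heapq

# Hypothetical road/ferry network; the travel times (in hours) are
# made-up numbers for illustration, not real journey data.
ROADS = {
    "Paris": {"Calais": 3.0, "Rome": 14.0},
    "Calais": {"Dover": 1.5},  # ferry crossing
    "Dover": {"London": 2.0},
    "Rome": {"London": 19.0},
    "London": {},
}


def best_route(graph, start, goal):
    """Return (total_hours, route) for the quickest path, or None if unreachable."""
    # Priority queue of (hours so far, current place, route taken to get here).
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        hours, place, route = heapq.heappop(queue)
        if place == goal:
            return hours, route
        if place in visited:
            continue
        visited.add(place)
        for neighbour, leg_hours in graph[place].items():
            if neighbour not in visited:
                heapq.heappush(
                    queue, (hours + leg_hours, neighbour, route + [neighbour])
                )
    return None


hours, route = best_route(ROADS, "Paris", "London")
print(hours, route)  # 6.5 ['Paris', 'Calais', 'Dover', 'London']
```

With these invented figures the detour via Rome is never chosen, because the search always extends the cheapest partial route first. A real navigation system would, presumably, also feed in live inputs such as traffic and weather.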

Are machines ruling the world? The human being’s natural desire to research and find better ways of doing things (positively) and the human being’s quest for controlling power, with its subsequent malevolent bent (negatively), seem to be driving the development of algorithms within AI that will be autonomous and ultimately ruling. There are many who believe that AI is thinking too deeply and will tread on the toes of our privacy, human rights and social/cultural frameworks.

Regulation has been hailed as the cure for the above, and many (Thierry Breton, European Commissioner for Internal Market, whom I respect, and others in the European Union and elsewhere in the world) are doing their best, whilst promoting development, to get systems and laws in place to protect civilisations from abuse, extortion and other forms of domination. Regulation and law enforcement all over the world, however, have on the whole been quite unsuccessful in protecting us from issues that are just the tip of the iceberg – spamming, unsolicited e-mail campaigns, cyber fraud, etc. How are they going to regulate man’s quest for digital supremacy and, even more frighteningly, AI’s quest for control and autonomy?

Are machines ruling the world? Not yet, perhaps, but AI, on account of those who write the algorithmic code, seems to be moving in that direction. An artificial intelligence that keeps on redesigning itself to take more control would be difficult to stop and could end civilisation as we know it.